10 Surprising Things That Disappeared from Modern Life

Time doesn’t just pass—it reshapes everything around us in quiet, surprising ways. While we often think of progress as a straight path toward improvement, reality is much messier and far more intriguing. Over the last century, countless things that once defined daily life have either disappeared without a trace or faded into the background, replaced by innovations that seemed impossible not long ago. It’s fascinating to realize how commonplace objects or habits—things our ancestors couldn’t live without—have become nearly mythical relics to us today.

Have you ever wondered what happened to the everyday items people used a hundred years ago? Some vanished because of technological leaps, others simply stopped making sense in a world that moves faster and thinks differently. This shift isn’t just about gadgets or industries—it reflects how we as a society adapt, forget, and reimagine. You might be surprised to learn how many seemingly “permanent” parts of life are now gone, often without anyone noticing.

This isn’t just nostalgia. It’s a peek into the strange mechanics of change—how some things get left behind, and others, often for reasons nobody planned, take their place. If that kind of transformation fascinates you, you’ll love diving into stories about forgotten inventions, vanishing professions, or even the curious fate of once-beloved cultural habits.

What’s most incredible is how quickly something can go from essential to extinct. Maybe, just maybe, the things we depend on today will one day seem just as strange.

10. Before Time Zones: When Minutes Made a Mess

It’s hard to imagine now, but there was a time—not that long ago—when the very concept of standardized time didn’t exist. Today, we take time zones for granted, neatly slicing the planet into organized wedges, each one an hour apart (well, mostly—China still operates on a single time no matter where you are). But before time was regulated, chaos reigned, and even short trips could warp your sense of reality by a few bewildering minutes.

Back in the day, towns told time by the sun—literally. Noon was when the sun was at its highest point in the sky. But the sun doesn’t care about borders or train schedules, which meant every location had its own version of noon. You could leave one town and arrive somewhere just a few miles away, only to discover it was already a few minutes later—or earlier. That sounds small, but the confusion it created was enormous.

Picture this: when it was exactly 12:00 PM in Washington, DC, it was 12:02 in Baltimore, 12:14 in Albany, and a full 12:24 in Boston. The differences may seem trivial now, but back then they were critical for railroad travel. Each train station ran on its own clock, and without a unified system, scheduling was a logistical nightmare. Rail companies had to publish comparative timetables—complex charts that showed what time it was here when it was that time there—just to help passengers guess when the train might actually show up.
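Those odd-looking offsets fall out of simple arithmetic: the Earth turns 360 degrees in 24 hours, so every degree of longitude shifts solar noon by about four minutes. Here is a minimal sketch in Python. The longitudes are rough modern city coordinates supplied for illustration (they are not from any railroad timetable), and the function names are made up for this example.

```python
# Approximate longitudes in degrees west. These are illustrative assumptions,
# not historical survey values.
LONGITUDE_WEST = {
    "Washington, DC": 77.04,
    "Baltimore": 76.61,
    "Albany": 73.76,
    "Boston": 71.06,
}

# The Earth turns 360 degrees in 24 hours, which works out to 4 minutes per degree.
MINUTES_PER_DEGREE = 24 * 60 / 360


def solar_offset_minutes(city, reference="Washington, DC"):
    """Minutes by which local solar time in `city` runs ahead of `reference`."""
    # A city farther east (smaller west longitude) reaches solar noon earlier,
    # so its sun-based clock reads later at the same instant.
    return (LONGITUDE_WEST[reference] - LONGITUDE_WEST[city]) * MINUTES_PER_DEGREE


def local_clock(city, reference_hour=12, reference_minute=0):
    """Clock reading in `city` when the reference city shows the given time."""
    total = reference_hour * 60 + reference_minute + round(solar_offset_minutes(city))
    return f"{total // 60 % 24:02d}:{total % 60:02d}"


for city in LONGITUDE_WEST:
    print(city, local_clock(city))
```

With these assumed coordinates, the results land within a minute of the figures quoted above. Small gaps are to be expected, since each town set its clock from its own reference point rather than from a modern city-center coordinate.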

This wasn’t just inconvenient; it was dangerous. Missed connections, delays, and even train collisions occurred due to mismatched clocks. Relief came in November 1883, when U.S. and Canadian railroads adopted a shared system of standard time zones. Federal law didn’t make those zones official until 1918.

Today, our clocks are synced down to the atomic second, but not long ago, a two-minute difference could mean missing your train—or worse. A reminder that even the concept of “now” wasn’t always so simple.

9. Ever Wondered Why White Dog Poop Seems to Have Vanished?

You know, there’s something rather peculiar that many of us have noticed over the years. If you’re old enough, you might remember a time when dog poop, believe it or not, would often turn white. Yeah, I know it sounds a bit strange, but it was a common sight back then. Just think about it – wherever you went, if a dog owner hadn’t bothered to clean up after their furry friend, there it was, a white patch of, well, you know what.

Now, let’s take a trip down memory lane to the ’80s and even earlier. Back then, dog food makers bulked up their products with fillers that weren’t exactly nutritious. Bone meal was one of them. Basically, it was just calcium in a different form. So when a dog ate and digested its food, most of that bone meal simply passed through its system and came out the other end.

Picture this: after the dog had done its business and it was left sitting on the sidewalk, as it dried out, it would transform into this powdery white substance. It was like little ghost turds in the sun! It was quite a sight, albeit a bit gross.

But here’s where things get interesting. Starting in the ’90s, dog food companies realized they could do better for our four-legged companions. They cut back on the bone meal and added more fiber and actual nutrients, which meant better nutrition for dogs.

As a result of this change, what we started to see was a decrease in the white leftovers. Nowadays, if someone leaves their dog’s poop lying around (which, by the way, they really shouldn’t), it tends to stay the same color. It’s no longer that powdery white that used to be so common.

Isn’t it fascinating how a simple change in the ingredients of dog food can have such a visible impact on something as mundane as dog poop? It just goes to show that even the smallest changes can sometimes lead to rather interesting outcomes.

The next time you spot a forgotten pile that hasn’t turned into a white powdery mess, you’ll know why: the recipe changed, and the waste changed with it.

It’s all part of an ongoing story that shows how far we’ve come in taking care of our pets. And who knows, maybe in the future, we’ll see even more positive changes that will benefit both our pets and the environment.

8. The Surprising History of Public Drinking: From Gross Cups to Modern Fountains

Have you ever wondered how people managed to stay hydrated in public before the invention of water fountains and disposable cups? It’s a fascinating topic that reveals a lot about our past and the evolution of hygiene practices.

In the not-too-distant past, public hydration was a very different experience. Schools and other public places didn’t have the convenient water fountains we’re used to today. Instead, there were water buckets and other large containers that people would dip cups or scoops into to drink. Imagine a scenario where everyone, from students to adults, would drink from the same cup. Yes, you heard that right—a single, shared cup that countless individuals would put their mouths on, leaving behind their saliva and bacteria for the next person. It’s enough to make you cringe, isn’t it?

This practice was not only unhygienic but also posed significant health risks. The spread of diseases through shared drinking vessels was quite common. It wasn’t until the early 1900s that a solution emerged: the disposable cup. These cups, often made of paper, became a game-changer. Each one was clean, used once, and then discarded, which greatly reduced the risk of cross-contamination.

The 1918 influenza epidemic really highlighted the importance of these disposable cups. As the flu spread rapidly, the need for a hygienic way to drink in public became paramount. Disposable cups became an essential item, helping to prevent the spread of the virus. It’s incredible to think that something as simple as a paper cup could have such a significant impact on public health.

Today, we take water fountains and disposable cups for granted. We have the luxury of quenching our thirst without worrying about the cleanliness of shared vessels. But it’s important to remember the challenges people faced before these innovations. The history of public drinking is a testament to human ingenuity and our constant pursuit of health and hygiene.

7. When Graveyards Were the Go-To Picnic Spot

It might sound a bit eerie by today’s standards, but before the rise of public parks, if you wanted to enjoy a peaceful afternoon outdoors with some bread, cheese, and company, your best option was… the cemetery.

Yes, seriously. Long before urban green spaces became part of modern life, graveyards doubled as public gathering places. Back then, parks as we know them were either rare or nonexistent. In England, the vast majority of green land was privately owned until well into the 19th century. And while Boston Common was technically America’s first public park (established in the 1600s), by the year 1800, the entire country only had 16 parks. For most people, especially in growing cities, there just wasn’t anywhere to go for a casual outdoor meal.

Enter the cemetery. Quiet, open, and filled with greenery, they were surprisingly ideal for picnicking. They offered space, serenity, and perhaps unexpectedly, beauty. During the 1800s, the design of cemeteries began to shift—from bleak, overcrowded burial grounds to landscaped “rural cemeteries”, like Mount Auburn Cemetery near Boston, which featured winding paths, trees, and gardens. These spaces were intentionally created to be more than just resting places for the dead—they were meant to be places for the living to visit, reflect, and yes, even relax.

Public health crises and epidemics in the 19th century also made graveyards frequent destinations for families mourning loved ones. But beyond mourning, these visits often turned into social outings, complete with picnics, games, and conversation. It wasn’t unusual to see families gathered on grassy plots, eating lunch near a marble monument, as birds chirped overhead.

Today, the idea might raise some eyebrows, but back then, cemeteries were some of the most accessible and welcoming green spaces around. It’s a reminder that human habits evolve—and sometimes, what seems strange now was once just part of an ordinary day.

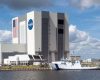

6. Before Passwords: When Computers Had Physical Keys

Today, securing your data means a password, a fingerprint, or even face recognition. But imagine a time when protecting your computer meant using an actual metal key, like the kind you’d use to lock a drawer. Sounds strange? It wasn’t just real—it was the standard.

Back in the early days of personal computing, especially during the era of IBM’s pioneering PCs in the 1980s and early ’90s, machines often came with a physical lock built right into the computer case. Turn the key, and the system would lock—preventing anyone from using the keyboard or tampering with your files. It was a simple but oddly charming kind of security in a time before anyone had heard of multi-factor authentication or firewalls.

These locks weren’t just cosmetic. In office settings or shared environments like schools, you might walk away for a moment—grab a snack, take a break—and come back to find someone messing with your project. The lock helped prevent that. In some cases, it even disabled access to the power button or reset switch, giving users a little peace of mind.

Of course, this being the early tech era, not everything worked perfectly. Not all manufacturers wired their lock mechanisms properly, which meant sometimes the lock did absolutely nothing—just a tiny hole and a tiny key with no purpose beyond the illusion of security. Still, it was a thoughtful touch in an era when digital threats hadn’t yet evolved, but physical access was everything.

It’s fascinating to think how far we’ve come—from turning keys to scanning fingerprints, from plastic keyholes to cloud encryption. But these little locks were a reminder that security has always mattered, even if the tools looked very different.

Want to see what one looked like? Check out an IBM Model 5170 (the PC AT), and you’ll spot that old-school lock right on the front panel, like a vault for your 20MB hard drive.

5. Why Airplane Windows Are Round (and Why That Probably Saved Your Life)

Next time you glance out of an airplane mid-flight, take a moment to notice the round windows. They might look sleek or even stylish, but their shape isn’t just for looks—it’s a matter of life and death.

Back when commercial aviation was still in its infancy, engineers designed planes much like they designed buildings: with square windows. After all, that shape made manufacturing easier and looked familiar. But as planes started flying higher—reaching cruising altitudes around 30,000 feet—something horrifying began to happen. Planes were literally tearing themselves apart in mid-air.

The culprit? The sharp corners of square windows. At high altitude, airplane cabins must be pressurized to keep passengers safe and comfortable. However, stress doesn’t distribute evenly around a square opening. The corners create stress points where the force of pressurization becomes concentrated, two to three times higher than on the flat surfaces. Flight after flight, that concentrated stress caused metal fatigue, and cracks began to spread from the window corners. In two tragic cases involving the de Havilland Comet, the result was a catastrophic in-flight breakup with multiple fatalities.

The terrifying realization led engineers to redesign windows into a smooth oval or circular shape, which allows stress to distribute evenly across the frame. The round design eliminates sharp corners, which means there’s no place for stress to concentrate and start a crack. Problem solved, lives saved.

The next time you rest your head on the curved edge of an airplane window, remember: it’s not just a window—it’s a masterpiece of aeronautical engineering, designed to keep you in the sky and not, well, flying out of it.

Funny how something so simple—just the shape of a window—can make such a huge difference between a safe landing and a tragic headline.

4. The Surprising Link Between Cutlery and Overbites: A Look into the Past

You might be surprised to learn that overbites, which affect about 8% of people severely and 20% to some degree, weren’t nearly as common in the past as they are today. What could be the reason for this? Well, you can point the finger at the invention of cutlery, specifically forks and knives.

Anthropologists have dug up some interesting findings. They say that around 250 years ago, almost nobody had an overbite. If you were to look at old skeletons, you’d see evidence of this. People back then had jaws that were well-aligned.

Now, here’s where things get really interesting. Research into the history of overbites has revealed that the Chinese developed overbites about 900 years before Europeans. This seems really strange if you think that overbites are just an evolutionary change, as was previously believed. So, why did the Chinese get overbites 900 years earlier? Well, it turns out that the Chinese started using chopsticks 900 years earlier than Europeans started using cutlery.

Before utensils like forks and knives came into the picture, humans ate with their hands. People would pick up their food and tear off a chunk. This required a lot of work from the jaw muscles: biting, tearing, and chewing. But when forks and knives were introduced, they took over much of the work our jaws used to do. Food that has already been cut into small pieces puts far less strain on the jaw. Over time, as our jaws became weaker due to less use, overbites started to form.

3. Before Alarm Clocks, You Hired a Human to Wake You Up

We all know the feeling—dragging ourselves out of bed to the relentless beeping of a phone alarm or the subtle buzz of a smartwatch. But imagine a world where none of that existed. No digital assistants, no snooze button, not even a bedside clock. So how did people manage to wake up on time in the old days?

Enter the wonderfully strange profession of the Knocker Upper.

Before mechanical alarm clocks became widespread in the mid-1800s, many workers, especially in industrial towns across Britain and parts of Ireland, had to rely on a living, breathing human being to get them out of bed. The Knocker Upper was that person. Their job? Use a long pole (sometimes with a rubber tip), or in some cases a pea shooter, to tap on upper-story windows in the early morning hours. A few sharp knocks, and off they went to the next client.

The system was surprisingly efficient. Workers would pay a small fee each week, and in return, the Knocker Upper made sure they were awake for their shift—no excuses. It was a critical role in communities where factory schedules didn’t wait for latecomers. And get this: in some towns, this quirky profession survived into the 1970s, despite the rise of mechanical alarm clocks and electric gadgets.

Some Knocker Uppers even had unique techniques—like Mary Smith, a famous London Knocker Upper who used a blowpipe and dried peas to ping windows without waking the whole neighborhood. Efficient and discreet.

It’s one of those strange slices of history that makes you wonder: how many modern conveniences do we take for granted? Today, an app might gently nudge you awake with a sunrise simulation. A hundred years ago, it was a stranger with a stick knocking on your second-floor glass.

Makes that morning alarm seem a little less annoying, doesn’t it?

2. The Unlikely Toilet Paper Alternative: Sears Catalogs

In Western culture, toilet paper has become such a common and essential item that it’s hard to imagine life without it. Walk into any bathroom in America, and if you don’t find a roll of toilet paper, you’d probably feel a bit panicked. However, the toilet paper we know today wasn’t always around. In fact, it wasn’t until 1857 that it started to become more regularly available. And here’s the fascinating part – while humans have needed hygiene solutions for millennia, mass-produced toilet paper is a relatively modern invention. So what did people use before it became standard? For many Americans, the answer was the Sears catalog.

For those unfamiliar, Sears, Roebuck and Company was once the largest retailer in America, famous for its massive mail-order catalogs. These weren’t just simple brochures – they were comprehensive shopping guides, sometimes over 500 pages thick, featuring everything from farming equipment to wedding dresses. Households would receive multiple editions each year, and after browsing, many found an alternative use for them in their outhouses.

The catalogs were printed on uncoated, absorbent newsprint, making them surprisingly practical for their unintended purpose. This practice was so widespread that some publications, like the Farmer’s Almanac, began printing special editions with a pre-punched hole in the corner for easy hanging in outdoor toilets. Rural families would often keep a stack of old catalogs near their privies, tearing out pages as needed.

This historical tidbit reveals how creative people were before modern conveniences. What we now take for granted as a bathroom staple was once replaced by something as ordinary as a mail-order catalog. While it might seem unthinkable today, for generations of Americans, the Sears catalog was an essential part of their sanitation routine – proving that necessity truly is the mother of invention.

1. Before Forests Existed, the Earth Was Ruled by Giant Fungi

It’s almost impossible to picture Earth without trees. They surround us, define our landscapes, and support life as we know it. With an estimated three trillion trees, they feel eternal. But what if we told you that long before trees ever reached toward the sky, the planet’s tallest life forms were… massive mushrooms?

Yep, the real OGs of Earth’s skyline were fungi—towering giants that dominated the prehistoric land nearly 400 million years ago.

Known as Prototaxites, these colossal organisms looked like something straight out of a fantasy novel. They could grow up to 24 feet tall, with trunks three feet thick. For reference, that’s taller than your average giraffe and about as wide as a full-grown grizzly bear. And yet, they weren’t trees. They weren’t even plants. They were fungi—ancient life forms that thrived in a world still learning how to grow.

Back then, plants were still small and struggling, mostly low-lying shrubs and mosses. So these monstrous mushrooms didn’t have much competition. Wherever they grew, they towered over the landscape, forming eerie, mushroom-dotted worlds that would seem totally alien to us now.

These fungal forests eventually vanished around 350 million years ago, as the first trees began to rise and change the game. But their legacy is still fascinating—and a little unbelievable. Can you imagine walking through a forest of giant mushrooms instead of oaks or pines?